Hogfish geocrawler - putting your website on the map

February 14, 2017

If you are reading this, you are probably a website administrator who found references to Hogfish in your web server logs. If you found this page by accident and want to find out more about web crawlers in general, please read this Wikipedia article. Below you can find some information specific to the Hogfish geocrawler.

What is Hogfish?

Hogfish is a web crawler looking for pages with geospatial information. If it is crawling your site, chances are that you are hosting pages containing geotagged data: it might be a list of trails, parks, restaurants, buildings, etc. After crawling your site, Hogfish stores the URL of your page, its location data, and a short description (approx. 250 characters) in its database, which is indexed by location (longitude and latitude).

Why do you need geotagged information?

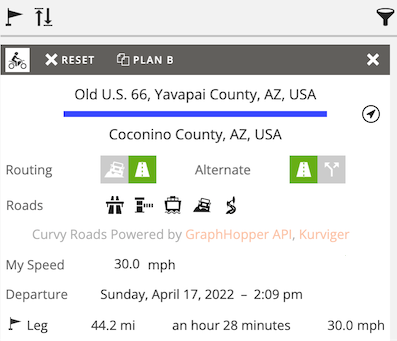

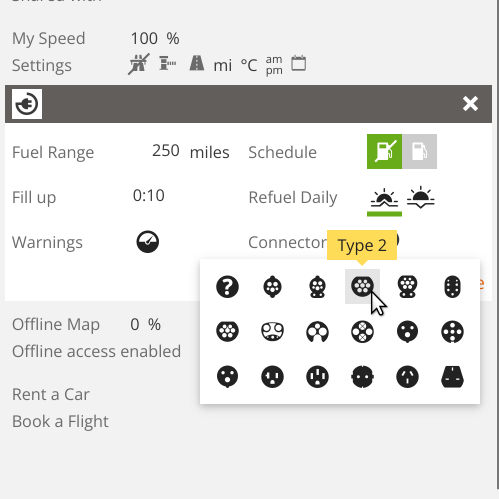

The Hogfish database and API are used by Furkot, an online trip planner, to display points of interest around the planned route. Furkot is a free application: if you have any concerns about how your data is used, please visit it and try searching for attractions.

What are the benefits of allowing my site to be crawled by Hogfish?

Furkot (and, in the future, other websites and applications using the Hogfish database) will send more people to your site. In this regard it is similar to Google search results referring people to your site.

In addition, as the owner of a site that Hogfish has crawled, you can get preferential access to the Hogfish API, for example to implement geo-aware search on your site. Please contact us, and once we establish that you are the owner of the site, we will give you access to the Hogfish API.

How often will Hogfish crawl my site?

Hogfish will never send more than 6 requests a minute (1 request per 10 seconds). In most cases its requests will be even less frequent. If you think that it is misbehaving, or if you want it to be even more careful with your servers, don't hesitate to e-mail hogfish@furkot.com.

How do I know that my site is accessed by Hogfish?

Your web server logs will contain the User Agent string:

Mozilla/5.0 (compatible; hogfish/2.0; ...)

To make sure that some rogue robot is not impersonating Hogfish, run a reverse DNS lookup on the request source address using the host command.
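The same check can be scripted. The sketch below is only an illustration: it does a reverse lookup on the source address, checks the resulting hostname against a domain suffix, and then confirms that the hostname resolves back to the same address. This page does not say which domain genuine Hogfish hosts resolve to, so `ASSUMED_SUFFIX` is a guess based on the contact address, and the function names are made up for this example.

```python
import socket

# ASSUMPTION: the domain that genuine Hogfish hosts resolve to is not stated
# on this page; ".furkot.com" is only inferred from the contact e-mail address.
ASSUMED_SUFFIX = ".furkot.com"


def reverse_lookup(ip):
    """Return the PTR hostname for an address (what `host <ip>` reports)."""
    return socket.gethostbyaddr(ip)[0]


def forward_lookup(hostname):
    """Return the list of IPv4 addresses that a hostname resolves to."""
    return socket.gethostbyname_ex(hostname)[2]


def looks_like_hogfish(ip, suffix=ASSUMED_SUFFIX,
                       reverse=reverse_lookup, forward=forward_lookup):
    """Forward-confirmed reverse DNS check for a request source address.

    The resolver functions are parameters so the logic can be exercised
    without touching the network.
    """
    try:
        hostname = reverse(ip)
    except OSError:  # no PTR record or resolver failure
        return False
    if not hostname.lower().rstrip(".").endswith(suffix):
        return False
    try:
        # The hostname must resolve back to the address we started from.
        return ip in forward(hostname)
    except OSError:
        return False
```

Calling `looks_like_hogfish("192.0.2.10")` with the default resolvers performs real DNS queries; a request whose address fails either step should be treated as coming from an impostor.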

Can I hide my pages from Hogfish?

Absolutely: just use a robots.txt file for your site. Hogfish strives to be a good citizen of the digital world. It's not going to wander around where it's not wanted, and it will respect your wishes. That said, there is really no downside to having extra links to your site. Before you ban Hogfish outright, please contact us. If Hogfish misbehaves, please assume software problems rather than ill will on the part of its creators.
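If you want to check a robots.txt rule before deploying it, Python's standard `urllib.robotparser` module can evaluate it offline. This is a sketch under assumptions: the `hogfish` user-agent token is inferred from the `hogfish/2.0` part of the User Agent string and is not confirmed here, and the `/private/` path and example.com URLs are invented for illustration.

```python
from urllib.robotparser import RobotFileParser

# ASSUMPTION: "hogfish" as the robots.txt token is inferred from the
# "hogfish/2.0" part of the User Agent string. "/private/" is a made-up path.
ROBOTS_TXT = """\
User-agent: hogfish
Disallow: /private/

User-agent: *
Disallow:
"""


def allowed(robots_txt, user_agent, url):
    """Return True if robots_txt lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent, url in [
        ("hogfish", "https://example.com/private/trails.html"),
        ("hogfish", "https://example.com/trails.html"),
        ("otherbot", "https://example.com/private/trails.html"),
    ]:
        print(agent, url, allowed(ROBOTS_TXT, agent, url))
```

A rule scoped to one directory like this keeps the rest of the site visible, which is gentler than banning the crawler outright.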

What can I do to make Hogfish crawl my site?

If you think that your data is useful for Furkot users, please send an e-mail to hogfish@furkot.com. We can't promise anything, but there are things you can do to make Hogfish happy and thus increase your chances of being in its database.

- Use a sitemap or have some other way to list all your pages.

- Use clean, modern, and well-structured HTML.

- Use rich microdata tags or the Open Graph protocol to mark geospatial information on your pages.
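As an illustration of the last point, the sketch below renders a microdata snippet using the schema.org `Place` and `GeoCoordinates` types. Whether Hogfish reads this particular vocabulary is an assumption; this page only says "rich microdata tags". The function name and sample values are hypothetical.

```python
from html import escape


def place_microdata(name, latitude, longitude):
    """Render an HTML microdata snippet for one geotagged place.

    ASSUMPTION: schema.org Place/GeoCoordinates is used as an example
    vocabulary; this page does not name the markup Hogfish expects.
    """
    if not -90.0 <= latitude <= 90.0:
        raise ValueError("latitude must be between -90 and 90")
    if not -180.0 <= longitude <= 180.0:
        raise ValueError("longitude must be between -180 and 180")
    return (
        '<div itemscope itemtype="https://schema.org/Place">\n'
        f'  <span itemprop="name">{escape(name)}</span>\n'
        '  <div itemprop="geo" itemscope '
        'itemtype="https://schema.org/GeoCoordinates">\n'
        f'    <meta itemprop="latitude" content="{latitude:.6f}">\n'
        f'    <meta itemprop="longitude" content="{longitude:.6f}">\n'
        '  </div>\n'
        '</div>'
    )


if __name__ == "__main__":
    # Sample values are illustrative only.
    print(place_microdata("Ridge & Valley Trailhead", 44.4605, -110.8281))
```

Placing the coordinates in `meta` elements keeps them machine-readable without changing how the page looks to visitors.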